-

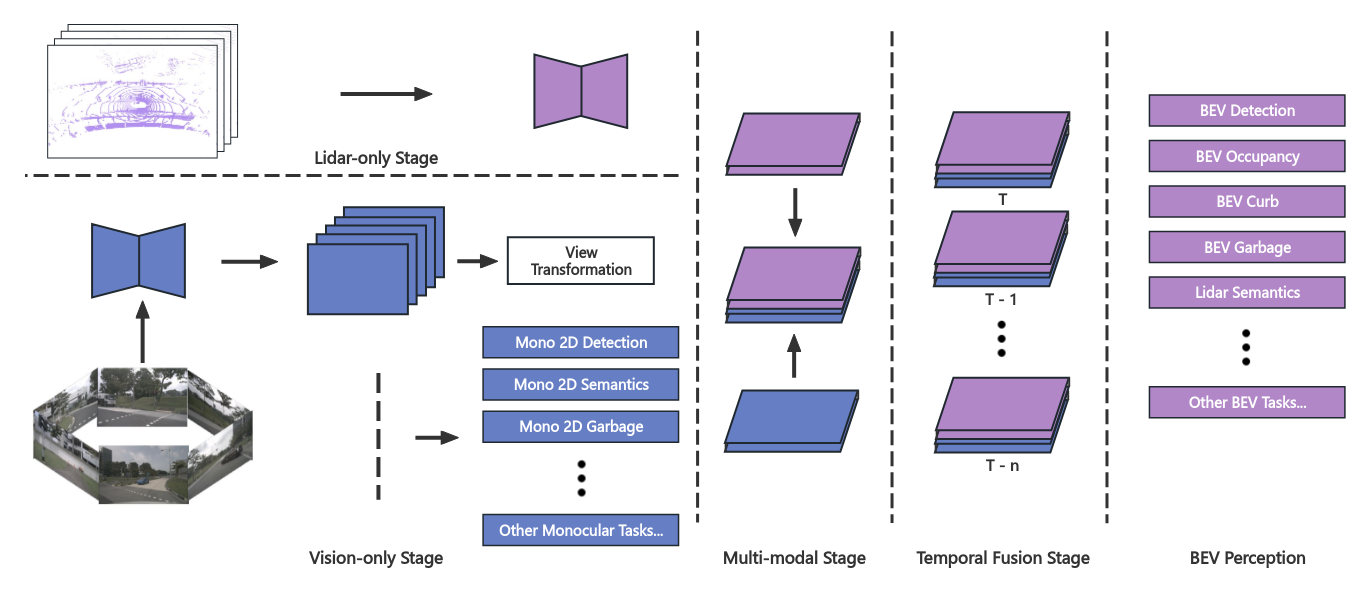

A 32.6% accuracy improvement over pure vision-based algorithms

-

An 18.9% accuracy improvement over single-LiDAR perception models

-

Scalability for integrating additional sensor data in the future

-

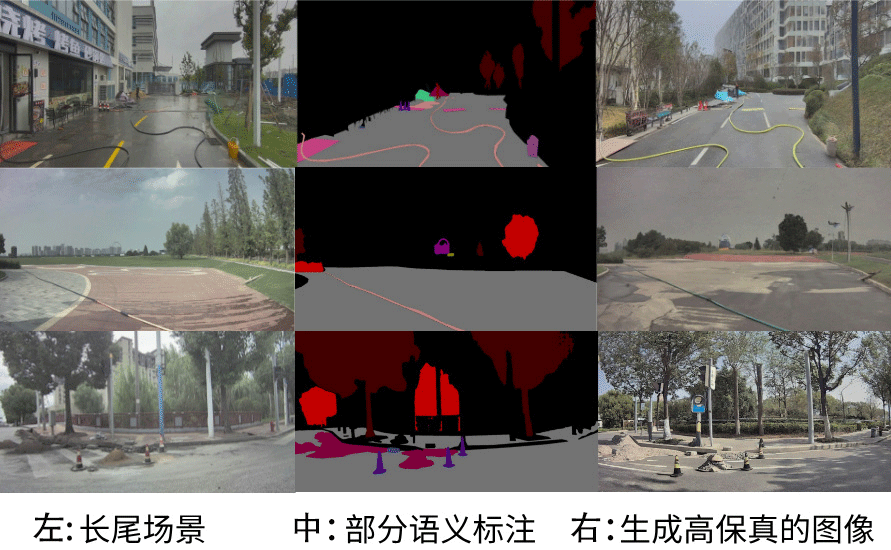

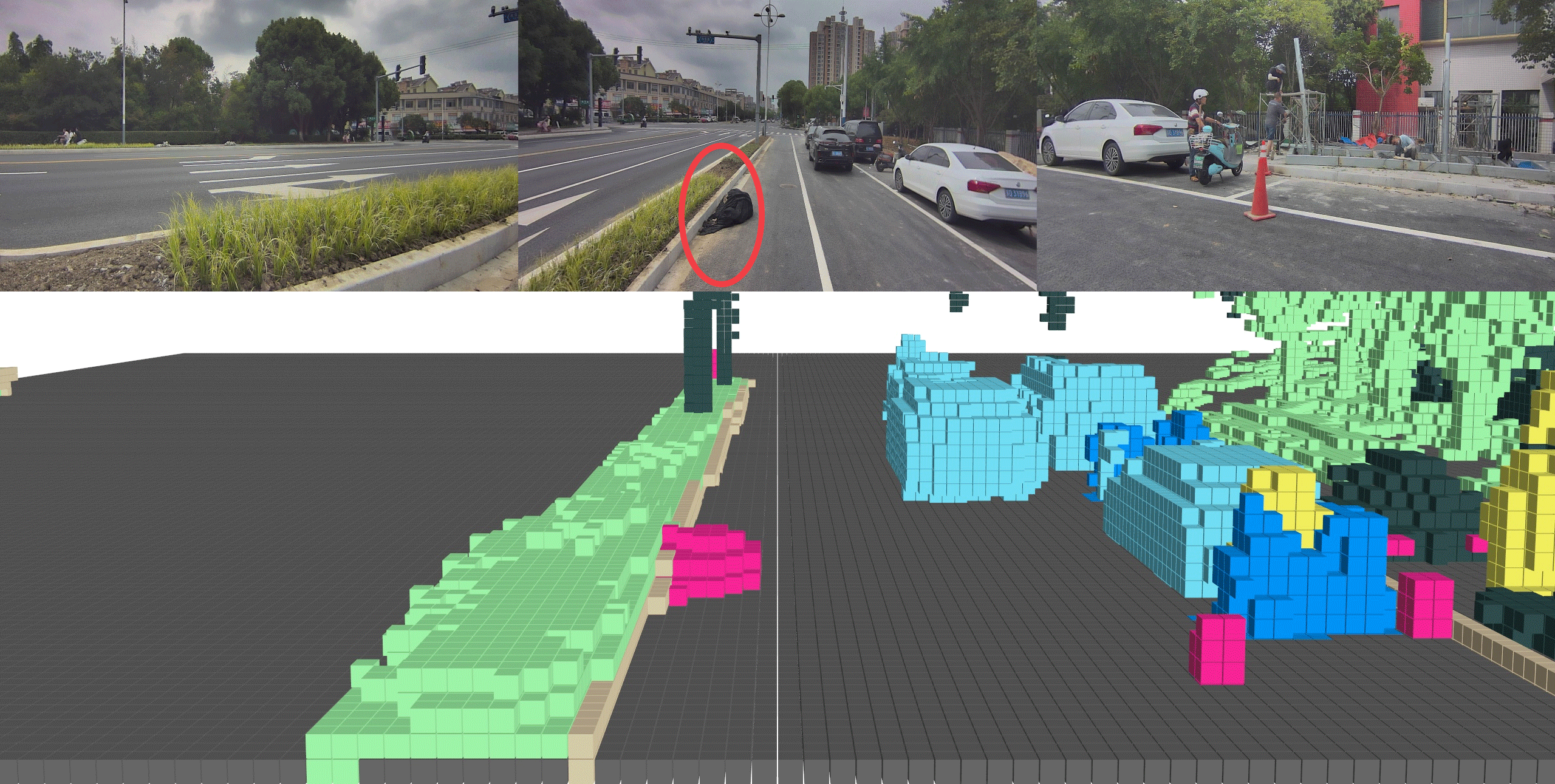

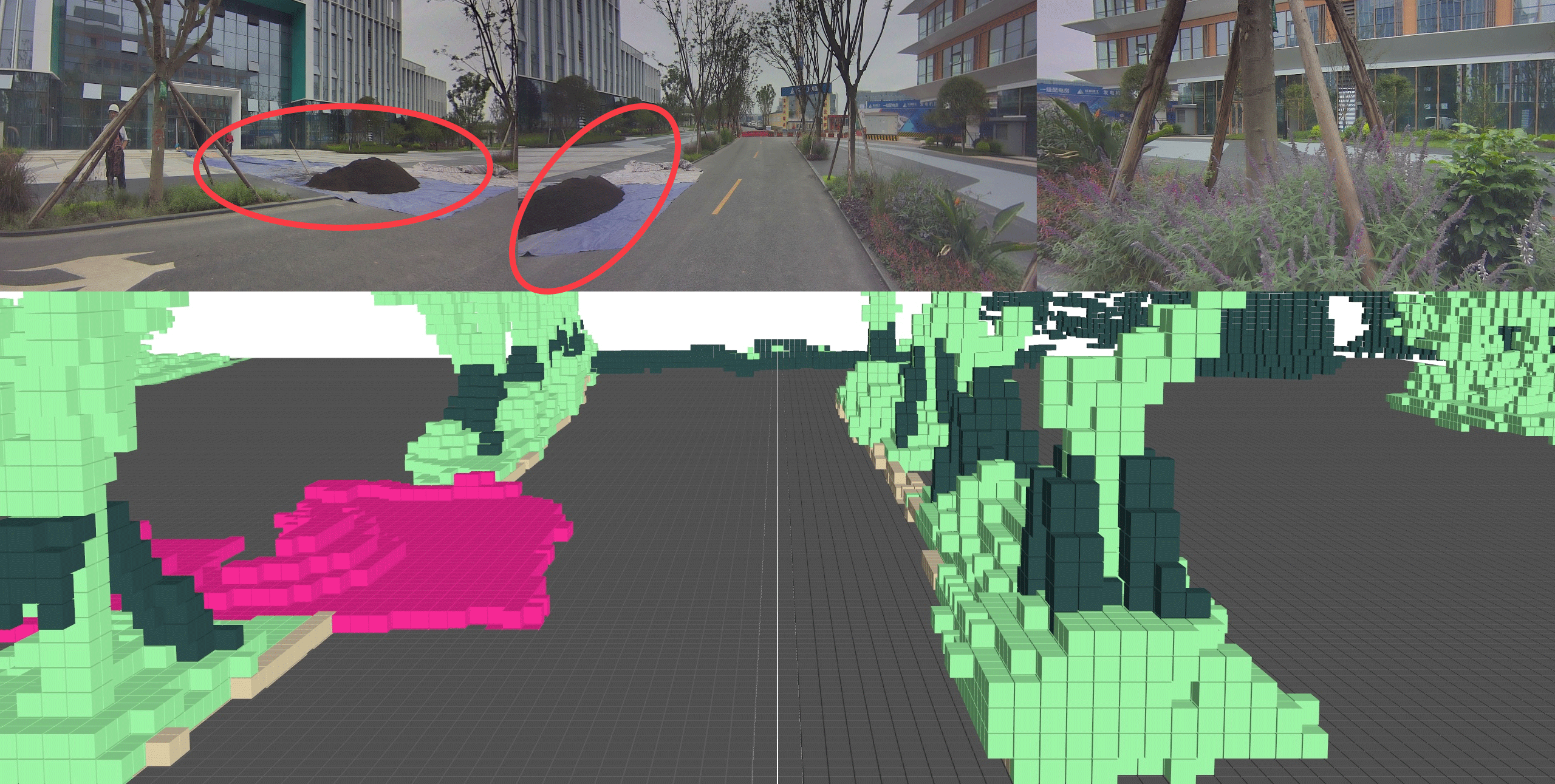

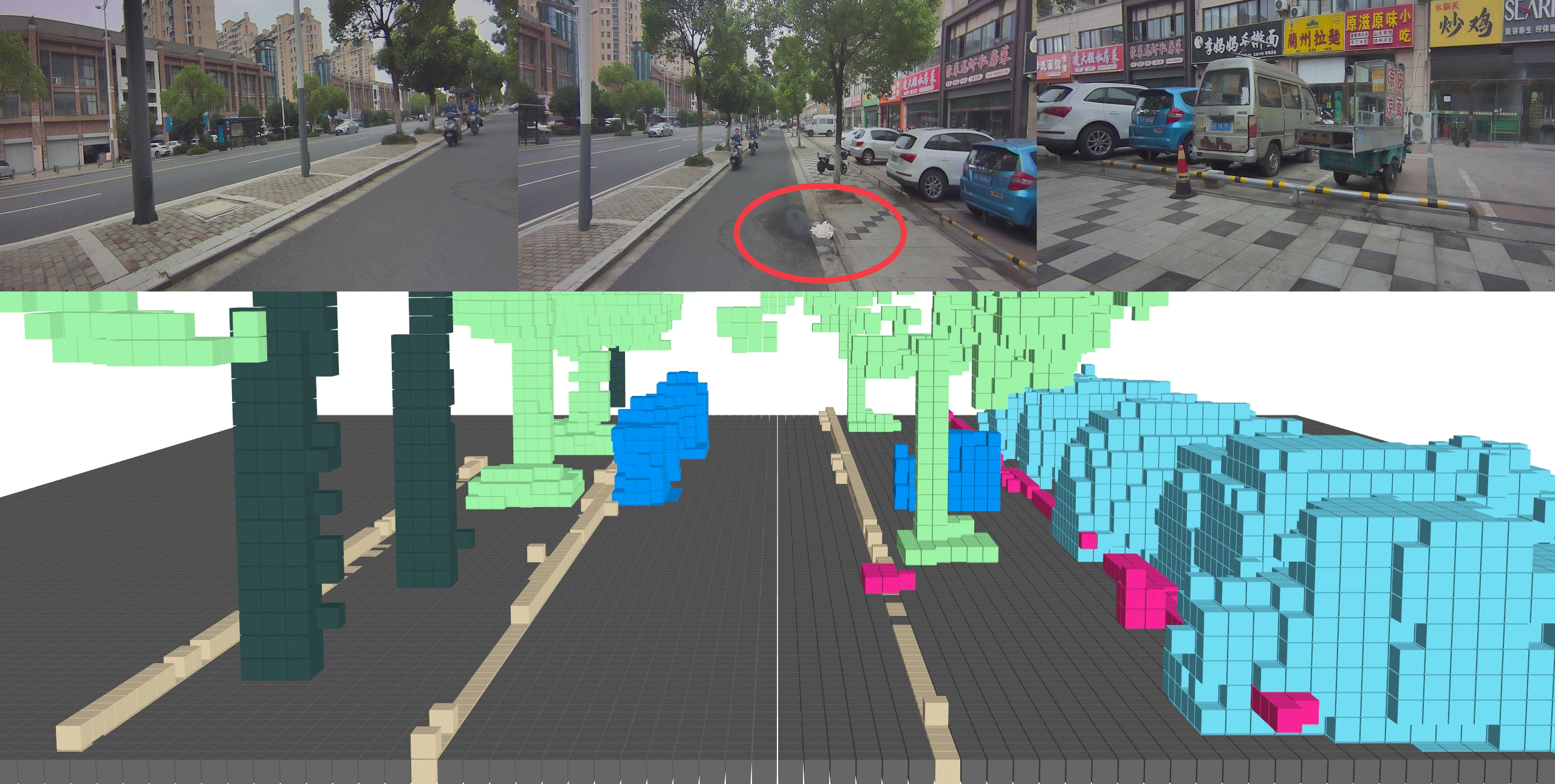

Long-tail distribution – Unlike common traffic participants (e.g., vehicles, pedestrians), low-height obstacles are diverse and sparse (e.g., pipes, stones, fallen shovels, potholes, thermos flasks).

-

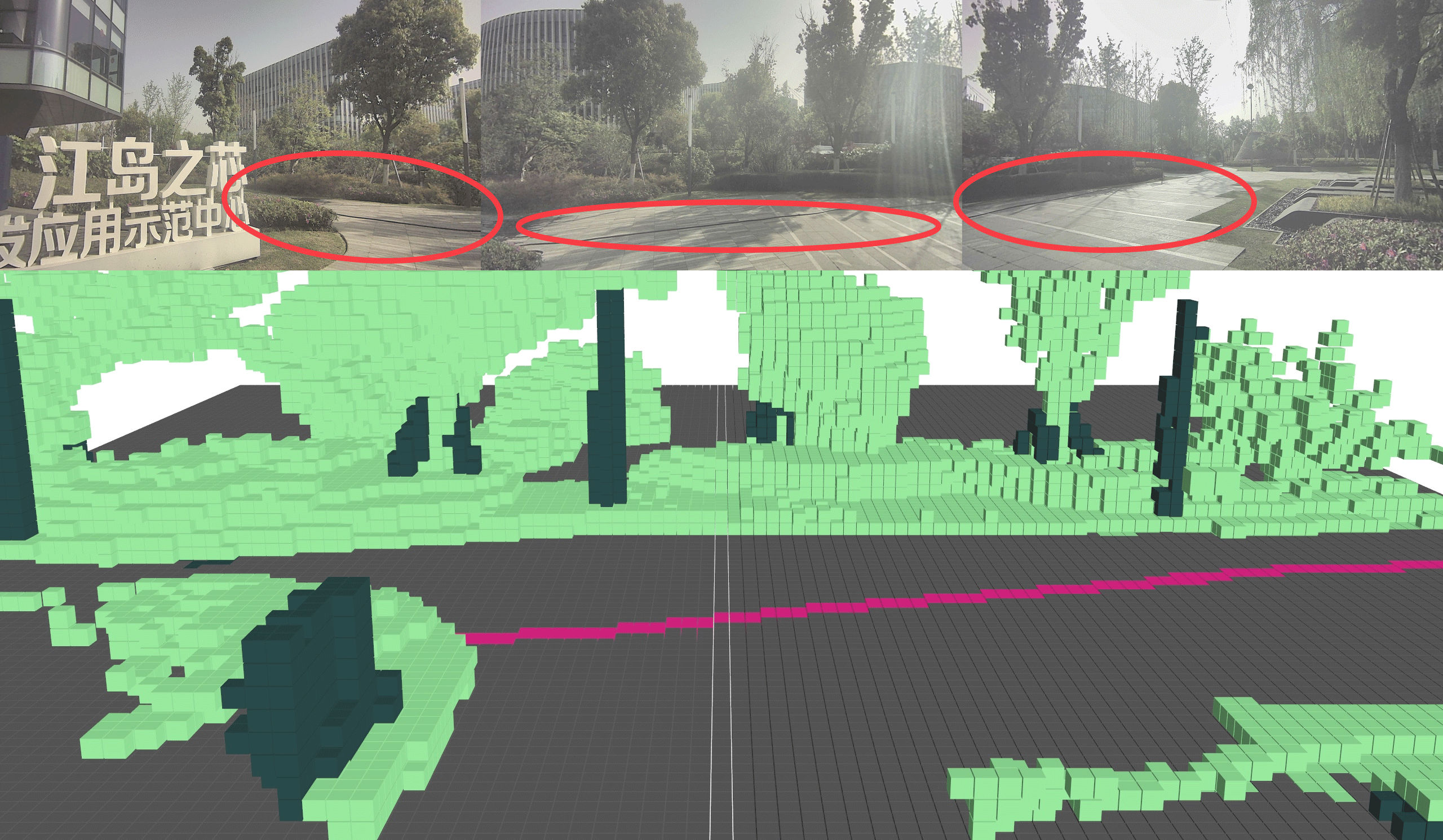

LiDAR limitations – The irregular size and shape of some obstacles make them difficult for LiDAR to consistently detect.

-

Operational decision complexity – The system must precisely differentiate between obstacles that require avoidance vs. those that can be safely cleaned (e.g., thin branches can be swept, but large branches may clog cleaning equipment and necessitate rerouting).
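The sweep-or-avoid distinction can be pictured as a rule applied to each detected obstacle. The following is a minimal Python sketch under stated assumptions, not the system's actual decision logic: the class labels, the `Obstacle` fields, and both size thresholds are hypothetical placeholders chosen only to mirror the branch example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SWEEP = "sweep"  # safe to drive over and clean
    AVOID = "avoid"  # reroute around the obstacle


# Hypothetical values for illustration only; a real system would tune these.
ALWAYS_AVOID = {"pipe", "stone", "shovel", "pothole", "thermos"}
MAX_SWEEPABLE_DIAMETER_M = 0.02  # hypothetical: branches up to 2 cm thick
MAX_SWEEPABLE_LENGTH_M = 0.5     # hypothetical: branches up to 50 cm long


@dataclass
class Obstacle:
    label: str         # class predicted by the perception model (hypothetical field)
    length_m: float    # longest extent of the detected object, in meters
    diameter_m: float  # thickness of the object, in meters


def decide(obstacle: Obstacle) -> Action:
    """Choose whether to sweep or avoid a single detected low-height obstacle."""
    if obstacle.label in ALWAYS_AVOID:
        return Action.AVOID
    if obstacle.label == "branch":
        # Thin, short branches can be swept; larger ones risk clogging the equipment.
        thin = obstacle.diameter_m <= MAX_SWEEPABLE_DIAMETER_M
        short = obstacle.length_m <= MAX_SWEEPABLE_LENGTH_M
        return Action.SWEEP if thin and short else Action.AVOID
    # Unrecognized classes fall back to the conservative choice.
    return Action.AVOID
```

For example, `decide(Obstacle("branch", 0.3, 0.01))` returns `Action.SWEEP`, while a 1.2 m branch 5 cm thick returns `Action.AVOID`. Defaulting unknown classes to avoidance reflects the long-tail problem above: an obstacle type the model has rarely seen is safer to route around than to sweep.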

-

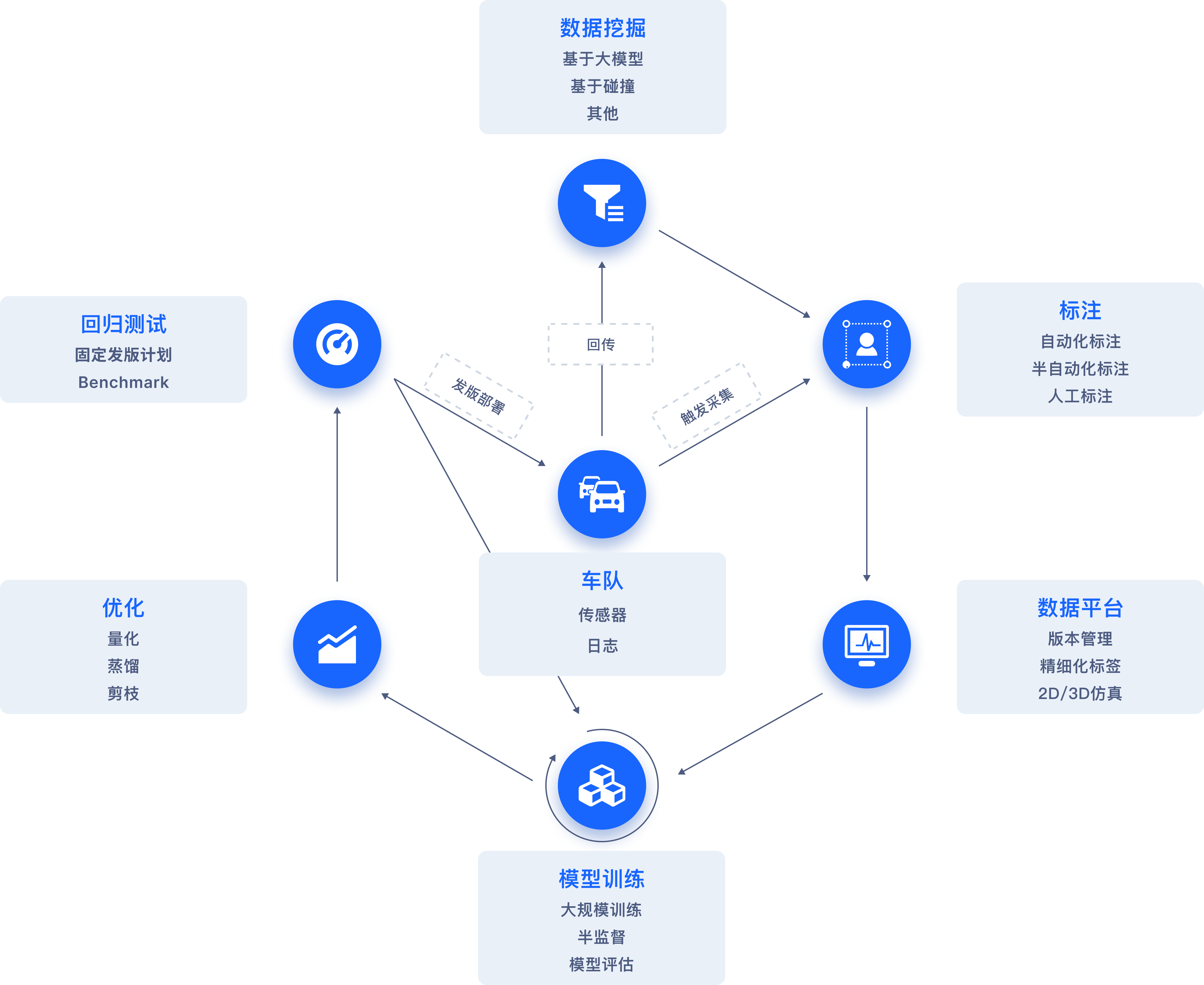

Data Mining to Enrich Long-Tail Database

-

Automatic Data Labeling to Save Costs and Improve Efficiency

-

2D and 3D Data Simulation to Improve BEV Perception Model Generalization